Fondation Jérôme Seydoux-Pathé, Paris – Architect: Renzo Piano

Founded in 2006, the Jérôme Seydoux-Pathé Foundation is an institution in Paris dedicated to the history of cinema and housed in an iconic building by Renzo Piano

Fondation Louis Vuitton, Paris – Architect: Frank Gehry

The Louis Vuitton Foundation is a museum in Paris supported by French luxury conglomerate LVMH and designed by Canadian-born American architect Frank O. Gehry

Matti Suuronen’s Futuro House

Futuro is an experimental, egg-shaped fiberglass house designed by Finnish architect Matti Suuronen in the late 1960s

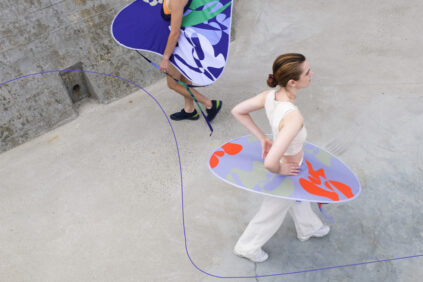

18th Venice Architecture Biennale, 2023 – The best pavilions

The best pavilions and exhibitions at the 18th Venice Architecture Biennale curated by Lesley Lokko and open until November 2023

The Vitra Museum explores the past and future of modern gardens

In an age of climate crisis, social injustice, and biodiversity under threat, the garden offers a place in which to reimagine the future.

COBE’s kindergarten in Copenhagen is a fairytale village

Designed by Danish architectural firm COBE, a kindergarten in Frederiksberg stands apart for the deep integration between its architecture and educational concept

Visionary projects for an energy-sustainable future at the Vitra Design Museum

At the Vitra Design Museum, the exhibition ‘Transform! Designing the Future of Energy’ focuses on how design can shape a society based on renewable energy

2023 Loewe Craft Prize finalists announced

The Loewe Foundation announced that the 30 finalist works will be exhibited at the Noguchi Museum in New York, starting May 17

Swedish office Front creates a furniture collection designed by nature

Swedish design office Front – founded by Sofia Lagerkvist and Anna Lindgren – has designed a new, intriguing collection of seating furniture inspired by nature

When did they get into our homes? A brief history of houseplants

How and when did plants enter our homes? From the 17th century to the present day, a brief history of our relationship with houseplants

Innovative bike helmet is made of bone-inspired 3D printed latticework

Chinese designers have recently developed a bike helmet, called Voronoi, which combines parametric modeling, bionics, and 3D printing

Rethinking the world. Radical design in an age of crisis

10 projects from ‘Design In An Age Of Crisis’, an initiative by the London Design Biennale aimed at fostering innovative post-pandemic design through radical thinking

Mark Rothko at the Fondation Louis Vuitton in Paris

Arguably the largest exhibition ever on the artist, ‘Mark Rothko’ at the Fondation Louis Vuitton in Paris presents an exceptional ensemble of 115 paintings by Rothko

Luzinterruptus’ Nonconsuming Shining Christmas Trees

With their latest project, Spanish collective Luzinterruptus creates ‘Nonconsuming Christmas Trees’ by parasitizing existing streetlights

Uffe Isolotto’s We Walked the Earth, Pavilion of Denmark, Venice Art Biennale 2022

At the Art Biennale 2022, Denmark presents a powerful installation by Uffe Isolotto that brings us into a Gothic Nordic fairy tale populated by centaurs

Australia Pavilion, Marco Fusinato, Desastres – 59th Venice Art Biennale 2022

At the Venice Art Biennale 2022, the Australia Pavilion features ‘Desastres’, a loud and hypnotic live performance and installation by Marco Fusinato

Maria Eichhorn investigates the hidden history of the German Pavilion in Venice

By removing parts of the German Pavilion at the Venice Biennale’s Gardens, Maria Eichhorn unveils the renovation the building underwent during the Nazi period

The 2022 Venice Art Biennale main exhibition at Giardini & Arsenale

Curated by Cecilia Alemani, the 2022 Venice Biennale International Art Exhibition – The Milk Of Dreams features works by 213 invited artists, most of whom are women

copyright Inexhibit 2024 - ISSN: 2283-5474